Apply Occam’s razor to your bug bounty hunting. Cut away all that is unnecessary. Reduce to the essential. Simplify to what is important and ignore the rest.

On the surface, the web is a complicated place. For bug bounty hunters, most of it is just noise. A distraction from what is truly important. If you’re fuzzing inputs with an automated or semi-automated scanner, the more inputs and effective payloads you have to play with, the more likely you are to find a bug. Otherwise, aim to simplify everything and manually test what remains.

Why You Should Simplify Requests When Bug Bounty Hunting

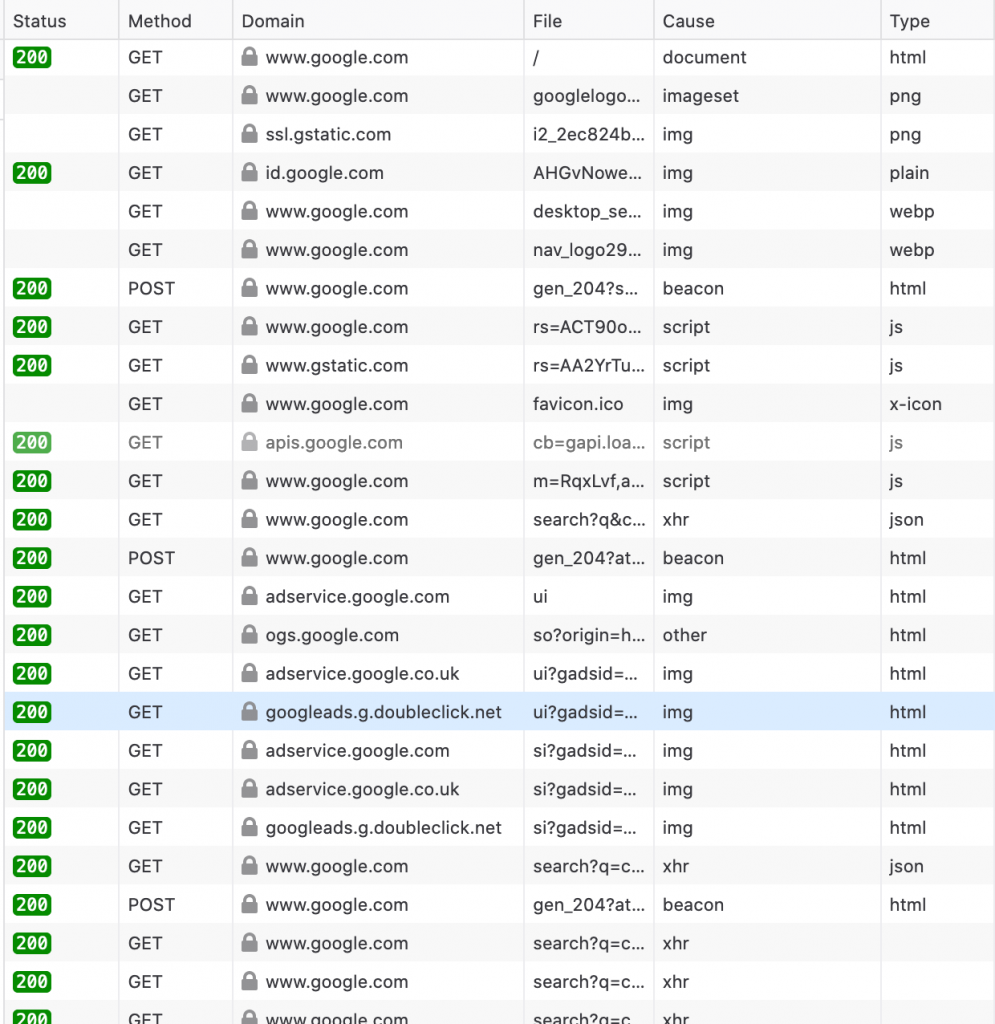

When you explore the web through a browser, it makes tens or hundreds of HTTP requests for every page you visit, downloading HTML, stylesheets, JavaScript files, images, videos, and more. As an example, a simple Google search in a browser can take 58 HTTP requests to run the search and render the results.

This is because modern web applications are bloated. Seriously bloated. If you look at the requests and responses of even the most basic web application, you’ll see numerous additional requests to private and third-party APIs, media resources, ad services and more. Each request is stuffed with cookies, headers, and parameters that add nothing to the functionality of the application. Analytics tracking codes, state cookies that are never used, unnecessary headers, and even input parameters from old code that no longer does anything. You’ll find it all.

While it makes the user experience much better, all of this noise makes it difficult to see what is really going on behind the scenes. If you’re testing for anything above the low-hanging fruit then gaining clarity and understanding of what is happening is key. Only by filtering out the noise can we hear the signal.

What Can Be Simplified?

As a bug bounty hunter, you’ll need to explore a web application with a browser to gain an understanding of what it’s trying to achieve. With that knowledge, you can reduce everything to the bare minimum components. I always try to reduce the following as much as possible:

- The number of HTTP or WebSocket requests to perform an action

- The number of headers, cookies, and input variables in each request

An action could be anything from a 1-click password reset email, to completing a 7-page, stateful, web form with client-side and server-side state manipulation. How you classify a single action isn’t important. It’s about asking ‘what is the bare minimum I need to do to achieve X’.

An Example – A Simple Google Search

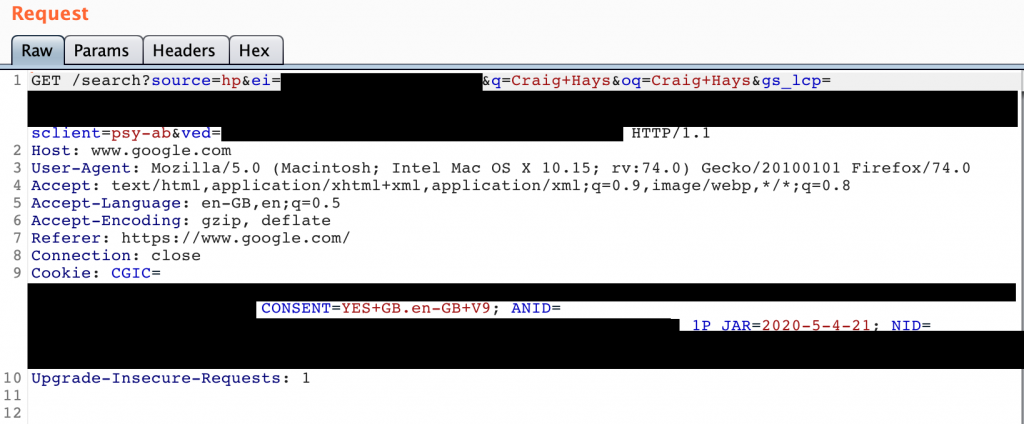

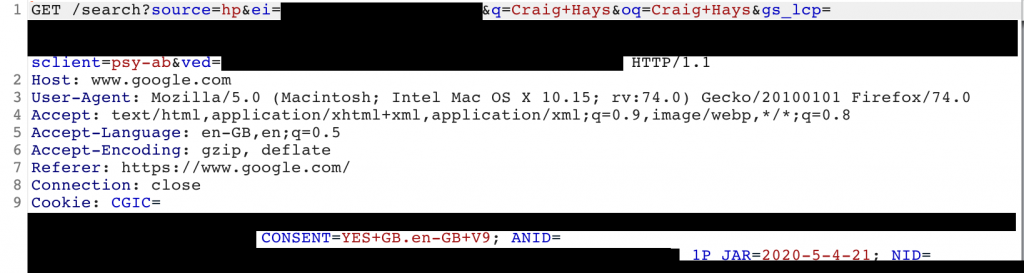

A Google search for my name (vain, I know…) should be simple. In theory, it only needs two things: the search query, ‘Craig Hays’, and a call to the action to perform the search. The reality is that for brand new, unauthenticated users, a simple Google search looks like this:

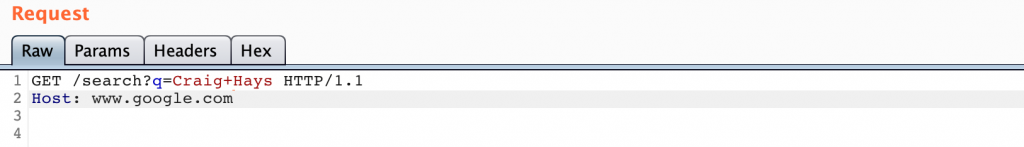

Look at all of those headers, URL parameters, and cookies! I can strip away almost all of that and still get a valid response. In fact, I only need two lines in order to get an answer.

The rest of the request is about tracking what you’re doing and formatting the response from the server. You can do this with almost any application online. 90% of the content in each request can be dropped without problems, if not more.
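As a rough sketch of what a stripped-down request like this might look like, the Python below builds the two lines by hand: a request line carrying only the search query, and a Host header. The host name `www.google.com` and the helper name `minimal_search_request` are my assumptions for illustration; the exact request in the screenshot may differ.

```python
from urllib.parse import urlencode


def minimal_search_request(query: str, host: str = "www.google.com") -> str:
    """Build a bare-bones HTTP/1.1 search request: a request line and a Host header.

    The default host is an assumption for illustration only.
    """
    # urlencode turns spaces into '+', giving the q=Craig+Hays form seen in the browser.
    path = "/search?" + urlencode({"q": query})
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
    ]
    # A blank line terminates the header section of an HTTP request.
    return "\r\n".join(lines) + "\r\n\r\n"


if __name__ == "__main__":
    print(minimal_search_request("Craig Hays"))
```

Everything else from the original request can then be added back one piece at a time to see what it changes.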

How I Simplify Web Requests

My approach to doing this is equally simple.

Identify Key Transactions

The first thing I do after running through an action a couple of times in a browser is to manually review each of the HTTP requests in the Burp HTTP proxy log and identify those that match what I was doing. If it was a search, I look for the completed search terms. If I filled in a form, I look for the form data. You get the idea.

If it’s not obvious by looking in the proxy logs, I switch on intercept mode and watch the requests in real-time as they are made. If I still can’t work it out, I start dropping requests out of the process until it breaks, then I repeat until I’ve removed everything I can. The majority of transactions are just a single HTTP request with a few analytics or media requests either side. It is less common for actions to require 2 or more distinct, client-less requests unless a redirect is taking place.
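If the proxy log is long, the same idea can be sketched in code: search every logged request for the value I typed into the browser. This is only an illustration. The log format (a list of dicts with `url` and `body` keys) and the name `find_key_requests` are hypothetical, and this is not Burp’s export format.

```python
from urllib.parse import quote, quote_plus


def find_key_requests(log, marker):
    """Return the logged requests whose URL or body contains the value typed in the browser.

    `log` is a hypothetical list of dicts with a 'url' key and an optional 'body' key.
    """
    # The same value can appear raw, with '+' for spaces, or with '%20' for spaces.
    variants = {marker, quote_plus(marker), quote(marker)}
    hits = []
    for entry in log:
        haystack = entry["url"] + "\n" + entry.get("body", "")
        if any(variant in haystack for variant in variants):
            hits.append(entry)
    return hits


if __name__ == "__main__":
    # Made-up entries standing in for a proxy log.
    example_log = [
        {"url": "/search?source=hp&q=Craig+Hays"},
        {"url": "/analytics/collect", "body": "event=pageview"},
        {"url": "/images/logo.png"},
    ]
    for entry in find_key_requests(example_log, "Craig Hays"):
        print(entry["url"])
```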

Common Headers and Cookies

Once I’m down to the smallest number of HTTP requests possible, I send each request to Burp Repeater for per-request simplification. First I strip out all of the things I recognise as common headers and cookies that are present on most sites. With experience, you quickly realise that any ‘_ga=GA…’ cookies are Google Analytics tracking codes and the application works just fine without them. JSESSIONID, on the other hand, is a Java-generated session identifier and, without it, the app may not remember who you are.

Removing an ‘Authorization: Bearer’ header will log you out (or it should). Cutting things out is also a valid form of security testing. If you remove all of the session cookies and you can still access a resource, that’s a bug. If you take out the CSRF token and it still works, that’s probably another bug. There’s value in cutting away the excess beyond the outcome. With simplicity comes understanding and insight.
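To make that first pass concrete, here is a minimal Python sketch of dropping cookies I recognise as analytics noise from a Cookie header. The prefix list and the name `strip_noise_cookies` are my own assumptions for illustration, not an exhaustive or authoritative list, and prefix matching could also catch an application cookie that happens to start the same way.

```python
# Assumed list of analytics cookie name prefixes; extend it from your own experience.
NOISE_COOKIE_PREFIXES = ("_ga", "_gid", "_gat")


def strip_noise_cookies(cookie_header: str) -> str:
    """Return the Cookie header value with recognised analytics cookies removed."""
    kept = []
    for pair in cookie_header.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        # Everything before the first '=' is the cookie name.
        name = pair.split("=", 1)[0]
        if name.startswith(NOISE_COOKIE_PREFIXES):
            continue  # recognised tracking cookie, drop it
        kept.append(pair)
    return "; ".join(kept)


if __name__ == "__main__":
    header = "_ga=GA1.2.123.456; JSESSIONID=abc123; _gid=GA1.2.9"
    print(strip_noise_cookies(header))  # prints: JSESSIONID=abc123
```

Session cookies such as JSESSIONID are deliberately left alone here; removing those is a separate, manual test.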

Context-Based Guesses

Based on the names and values of each Key: Value pair in the request, I take a guess at what they probably mean. For example, looking again at the first Google query:

- GET /search?source=hp – I’m pretty sure that hp stands for homepage as I just came from there.

- &q=Craig+Hays is a short form of &query=<the thing I just typed>

- &oq=Craig+Hays is the original query I typed, unchanged because Google didn’t feel the need to auto-correct it for me

- gs_lcp is probably Google Search something, something, something…

As I go through each one, if it doesn’t look important I strip it out, retesting each time in Burp Repeater.
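A small helper can make this review less error-prone by listing each parameter on its own and rebuilding the URL without one of them. The sketch below uses Python’s standard `urllib.parse`. The function names are hypothetical and the `gs_lcp` value is a placeholder, not the real one from the request.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def list_parameters(url):
    """Return the query string as a list of (name, value) pairs, in order."""
    return parse_qsl(urlsplit(url).query, keep_blank_values=True)


def without_parameter(url, name):
    """Rebuild the URL with every occurrence of one query parameter removed."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != name]
    return parts._replace(query=urlencode(kept)).geturl()


if __name__ == "__main__":
    # The gs_lcp value here is a placeholder for illustration.
    url = "/search?source=hp&q=Craig+Hays&oq=Craig+Hays&gs_lcp=PLACEHOLDER"
    for key, value in list_parameters(url):
        print(f"{key} = {value}")
    print(without_parameter(url, "gs_lcp"))
```

Each rebuilt URL can then be pasted into Burp Repeater to check whether the response still looks right.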

Trial and Error

Lastly, I simply remove headers, cookies, and parameters one by one until the application breaks, then I put the required parameter back in. Every breaking change tells me more about the way the underlying application works. Anything that doesn’t cause an error wasn’t needed and can be disregarded completely from future testing.
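The loop I follow by hand can be sketched in a few lines of Python. This is an illustration under assumptions, not a tool I’m describing above: `minimise` and `still_works` are hypothetical names, the item names are assumed to be unique, and in real use `still_works` would replay the request with only the given items and check that the response is still valid. Here a fake check stands in for the application.

```python
def minimise(items, still_works):
    """Remove items one at a time, keeping only those whose removal breaks the request.

    `still_works` is a caller-supplied function that takes a list of items and
    returns True if the request still succeeds with only those items present.
    """
    required = list(items)
    for item in list(items):
        candidate = [i for i in required if i != item]
        if still_works(candidate):
            required = candidate  # removing it changed nothing, so leave it out
        # otherwise the request broke, so the item stays in `required`
    return required


if __name__ == "__main__":
    # Fake oracle for illustration: pretend the app only needs Host and q.
    def fake_still_works(present):
        return {"Host", "q"} <= set(present)

    everything = ["Host", "User-Agent", "Cookie", "q", "oq"]
    print(minimise(everything, fake_still_works))  # prints: ['Host', 'q']
```

This removes one item at a time, so it will not spot two items where either one alone is enough. Manual testing is still needed for cases like that.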

The Result

At the end of this process I have the following:

- The Minimum Viable Interaction required with the web application to achieve the desired outcome

- A greater understanding of how the application works and which inputs do what

- A smaller, more focused attack surface to test against

- The option to add back in what was stripped away to test the effects of changing one variable at a time.

In Summary

Use this technique as another tool in your toolkit but don’t feel like you need to do it all of the time. There’s nothing wrong with testing in depth. You’ll miss simple bugs if you never look at the bigger picture just as much as you’ll miss deep bugs if you never narrow your focus. Strip away what isn’t essential to learn the minimum inputs for an application and use that knowledge in the rest of your testing. If you toggle inputs you’ll reach a level of understanding where you could code the application yourself from scratch. That’s the true goal of what we’re aiming for here.

